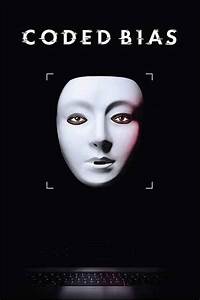

Coded Bias is a documentary film about Joy Buolamwini, a Black woman who was a student at the Massachusetts Institute of Technology. For a Media Lab course, she was building an "Aspire Mirror" that would overlay graphics, such as a lion, on the user's reflected face to inspire them. The mirror used artificial intelligence algorithms for facial recognition. At first, the AI could not detect her face unless she put on a white mask. Buolamwini considered potential issues with lighting or camera angles but concluded that the problem lay in the algorithm itself, which was producing racially and gender-biased results.

The algorithmic issue sparked Buolamwini's interest because of the discrimination it implied toward women and people of color, who do not fit the white male standard. She learned that AI as a field began in the math department at Dartmouth College. The group that worked on the project there consisted entirely of white men, so the algorithms were best suited to detecting people who looked like their creators.

Facial recognition is widely used these days. I use it without thinking every time I apply a filter on Snapchat. It had never occurred to me that the information Snapchat receives from recognizing my face could be used in other ways. Many people with the latest iPhones use facial recognition to unlock their phones, but they don't think about how else this data could be used.

Buolamwini examined the use of AI and facial recognition in a variety of settings. She discovered examples in the United Kingdom and China where the police were using AI to find criminals on the street. She found that the algorithms were biased against people who are not white males and produced many inaccurate results, wrongly labeling individuals as criminals or as being at high risk of committing a crime.

She also found an apartment building in Brooklyn, New York, that switched from key cards to facial recognition for entry. The facial recognition was also applied to security camera footage to figure out who was involved in various incidents. The residents opposed the AI system because they felt uncomfortable and did not like the feeling of being watched all the time.

Many businesses, including IBM, Amazon, and Apple, also use AI in their hiring process. These algorithms can show gender bias. One example came from when Amazon first started using AI to sift through applications. The algorithm was prone to gender bias and penalized applicants who listed women’s colleges, women’s sports, and similar items on their resume or application.

As a woman applying for internships and soon to be looking for a full-time job, I find this information concerning. While Buolamwini’s work has brought the issue to the attention of many employers whose systems showed this gender bias, there are likely many companies that have no idea the bias is even happening. Does this mean that by selecting "female" in the demographics section of a job application, I am potentially setting myself up for rejection?

Buolamwini’s determination throughout the film was inspiring. When she first noticed the bias, instead of complaining, she started digging. She asked questions and put in the work to get answers. Once she had identified the issues, she went to companies like IBM to present her findings. Her determination led to change: these companies stopped using the AI technology in order to reduce racial and gender bias.

She also noted that, as a woman of color, she knows people are going to try to discredit and defund her work. Women in technology are often underestimated because they are not part of the majority, but her determination and passion drive her to push through such challenges because she is making a difference.

Information is constantly being gathered through artificial intelligence and facial recognition. We do not know how this information is being used or to what extent it can be used. According to the film, there are still no regulations on these algorithms, so there are no limits on their use. Buolamwini emphasized that we need a regulatory organization that ensures algorithms work for all of society and do not discriminate.

More and more types and styles of AI will continue to enter and transform our world. What will people in power do with this information? I guess we will have to wait and see.
